Calvin's AI Risk Management Framework

Introducing Our AI RMF

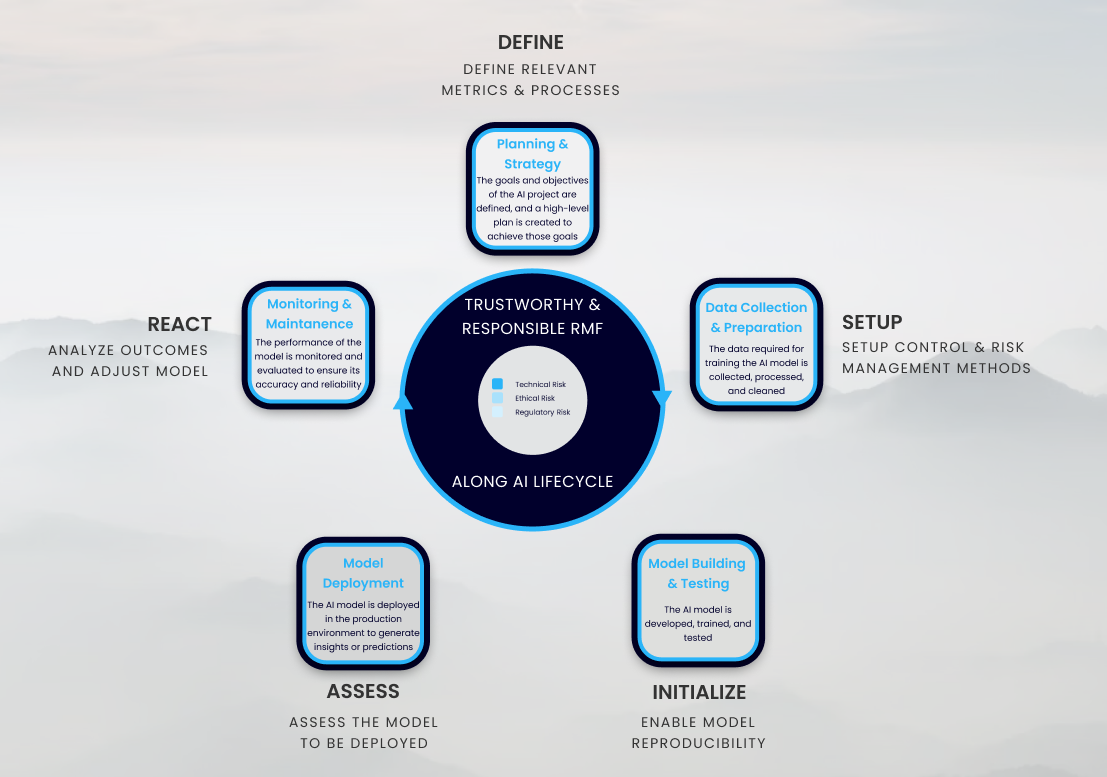

As artificial intelligence continues to transform our lives, it is important to ensure that the technology is developed and deployed in a way that is trustworthy, safe, and compliant with laws, regulations, and standards. Proper risk management is a critical part of developing trustworthy AI and requires a combination of technical, ethical, and regulatory considerations. In this blog post, we explore the key steps to creating trustworthy AI with an appropriate risk management framework.

Calvin's AI Risk Management Framework (RMF) provides a risk-centric process that integrates these technical, ethical, and regulatory considerations across the AI lifecycle, from planning through development and deployment to post-deployment activities. Our RMF is aligned with the AI Risk Management Framework of the US National Institute of Standards and Technology (NIST) and with the OECD report “Governing and managing risks throughout the lifecycle for trustworthy AI”. It defines five process steps for detecting, mitigating, and minimizing risk: “Define”, “Setup”, “Initialize”, “Assess”, and “React”.

Typically, the first step in any AI lifecycle is to create a plan and strategy for the AI project. This means defining goals and planning how the project will be executed to achieve them, which includes identifying effective data sources, selecting appropriate algorithms, and setting the right success criteria. In the associated RMF step, “Define”, the corresponding performance metrics are defined. Beyond these technical quality measures, it is important not to neglect process, accountability, and control. The stakeholders responsible for the model (technical) and for the use case (business) should be named. All process steps in development, testing, release, maintenance, etc. should be clearly communicated and implemented. Finally, the necessary technical and business documentation should be introduced, along with control through activity logging and monitoring.
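To make the “Define” step more concrete, here is a minimal Python sketch of how a team might record its metric targets, responsible stakeholders, and control requirements in one place and check that nothing is left undefined. The `DefinePlan` and `MetricTarget` classes, their fields, and the example values are hypothetical illustrations, not part of the Calvin platform or any published API.

```python
from dataclasses import dataclass, field


@dataclass
class MetricTarget:
    """A performance metric and the threshold it must reach (hypothetical schema)."""
    name: str
    threshold: float
    higher_is_better: bool = True


@dataclass
class DefinePlan:
    """Hypothetical record of what the "Define" step fixes before development starts."""
    use_case: str
    model_owner: str      # technical stakeholder responsible for the model
    use_case_owner: str   # business stakeholder responsible for the use case
    metrics: list[MetricTarget] = field(default_factory=list)
    activity_logging: bool = True
    monitoring: bool = True

    def missing_items(self) -> list[str]:
        """Return what is still undefined; an empty list means the plan is complete."""
        missing = []
        if not self.model_owner:
            missing.append("model_owner")
        if not self.use_case_owner:
            missing.append("use_case_owner")
        if not self.metrics:
            missing.append("metrics")
        if not (self.activity_logging and self.monitoring):
            missing.append("logging/monitoring")
        return missing


plan = DefinePlan(
    use_case="credit scoring",
    model_owner="data science team",
    use_case_owner="",  # not yet assigned
    metrics=[MetricTarget("auc", 0.80)],
)
print(plan.missing_items())  # ['use_case_owner']
```

Keeping ownership and controls in the same record as the metrics reflects the point above that the process and accountability side deserves as much attention as the technical measures.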

The second stage of the AI lifecycle usually involves collecting and preparing data for analysis. The collected data is pre-processed by formatting, cleaning, and transforming it into a form the machine learning model can process. At the same time, in our second RMF step, “Setup”, teams set up the control and monitoring methods that were defined in the first step. In addition, this step requires setting up a continuous risk management method. Doing this from scratch can be very complex, especially since AI risk management involves newly emerging risks. However, there is a simpler route: our risk management platform Calvin greatly simplifies this step, because it is already prepared for an AI-centric risk management approach. Once continuous risk management is in place, it is important to define appropriate risk-based quality criteria for AI models and their use cases. Only when these criteria are met can a model be deployed (or remain deployed).
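The deployment rule from the “Setup” step (a model may only be deployed, or stay deployed, while its risk-based quality criteria are met) can be sketched as a simple gate. The sketch below assumes each criterion is a metric name paired with a comparison operator and a threshold; `deployment_gate`, the metric names, and the threshold values are hypothetical examples chosen for illustration.

```python
import operator

# Hypothetical criteria format: metric name -> (comparison, threshold).
_OPS = {">=": operator.ge, "<=": operator.le}


def deployment_gate(
    measured: dict[str, float],
    criteria: dict[str, tuple[str, float]],
) -> tuple[bool, list[str]]:
    """Check measured values against risk-based quality criteria.

    A metric that was not measured counts as a failure, so the gate fails closed.
    Returns (passed, names_of_failed_criteria).
    """
    failures = []
    for name, (op, threshold) in criteria.items():
        value = measured.get(name)
        if value is None or not _OPS[op](value, threshold):
            failures.append(name)
    return (not failures, failures)


criteria = {
    "accuracy": (">=", 0.90),
    "demographic_parity_gap": ("<=", 0.05),  # example fairness criterion
    "max_latency_ms": ("<=", 200.0),
}
measured = {"accuracy": 0.93, "demographic_parity_gap": 0.08, "max_latency_ms": 150.0}

ok, failed = deployment_gate(measured, criteria)
print(ok, failed)  # False ['demographic_parity_gap']
```

Treating an unmeasured metric as a failure is a deliberate design choice here: it matches the idea that a model goes live only when every criterion is demonstrably met.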

Building and testing the AI model comes next in the AI lifecycle. This development stage includes designing and training the model, as well as evaluating the performance metrics chosen in the first RMF step, “Define”, on a test and validation dataset. If performance does not meet the quality criteria chosen in the second RMF step, “Setup”, the model needs to be optimized until it does. Once this has happened, it is crucial to ensure that the developed model can be reproduced, with the model and data stored in a consistent format. We call this reproducibility step “Initialize”.
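One common way to support reproducibility in the “Initialize” step is to fingerprint the training data, the configuration (including the random seed), and the resulting model, and then check that a re-run yields the same fingerprint. The sketch below assumes JSON-serializable artifacts and computes SHA-256 over a canonical JSON encoding; `train_toy_model` is a hypothetical stand-in for real training, and the manifest layout is illustrative only.

```python
import hashlib
import json
import random


def fingerprint(obj) -> str:
    """SHA-256 of a canonical JSON encoding (sorted keys, no extra whitespace)."""
    canonical = json.dumps(obj, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def train_toy_model(data: list[float], seed: int) -> dict:
    """Hypothetical stand-in for training: a seeded 80/20 split and a mean 'model'."""
    rng = random.Random(seed)
    shuffled = data[:]
    rng.shuffle(shuffled)
    train = shuffled[: int(0.8 * len(shuffled))]
    return {"mean": sum(train) / len(train)}


data = [float(x) for x in range(100)]
config = {"seed": 42, "model": "mean-baseline", "version": "1.0"}

model = train_toy_model(data, config["seed"])
manifest = {
    "data_sha256": fingerprint(data),
    "config_sha256": fingerprint(config),
    "model_sha256": fingerprint(model),
}

# Re-running with the same data and config must reproduce the same model hash.
assert fingerprint(train_toy_model(data, config["seed"])) == manifest["model_sha256"]
```

In practice such a manifest would typically be stored alongside the model artifact, so that a later retraining can be compared against it.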

Having ensured the quality and reproducibility of the model, it is now time to deploy it in a production environment. Before doing so, however, it is essential to assess the technical risks (part of which were already covered in the previous step) as well as the ethical and legal risks. This step should also include an assessment of the model's robustness in the new production environment. This RMF step, “Assess”, should first be performed before the model's initial roll-out. In addition, it is advisable to repeat the risk assessment, or at least part of it, each time the AI system is remodeled or retrained. Finally, if monitoring reveals that risk indicators deteriorate over time, an additional risk assessment and possibly an adjustment of the model are required. Here, too, the Calvin risk management platform helps to assess key AI-specific risks effectively and makes this step a breeze.
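Robustness can be assessed in many ways; one simple technique is a perturbation test, which adds small random noise to the inputs and measures how often the model's predictions stay the same. The sketch below uses a hypothetical linear classifier `predict`, Gaussian noise with an assumed standard deviation of 0.1, and synthetic inputs. Realistic noise levels and acceptance thresholds would have to come from the production environment and the quality criteria set in “Setup”.

```python
import random


def predict(x: list[float]) -> int:
    """Hypothetical stand-in model: classify by the sign of a weighted sum."""
    weights = [0.6, -0.4, 0.2]
    return int(sum(w * xi for w, xi in zip(weights, x)) > 0)


def perturbation_stability(model, inputs, noise_std=0.1, trials=20, seed=0) -> float:
    """Share of (input, trial) pairs whose prediction is unchanged under Gaussian noise."""
    rng = random.Random(seed)
    stable = total = 0
    for x in inputs:
        baseline = model(x)
        for _ in range(trials):
            noisy = [xi + rng.gauss(0.0, noise_std) for xi in x]
            stable += model(noisy) == baseline  # bool counts as 0 or 1
            total += 1
    return stable / total


# Synthetic inputs standing in for samples from the production environment.
rng = random.Random(1)
inputs = [[rng.uniform(-1, 1) for _ in range(3)] for _ in range(200)]

score = perturbation_stability(predict, inputs, noise_std=0.1)
print(f"stability: {score:.3f}")
```

A stability score well below 1.0 would suggest that predictions near the decision boundary are fragile, which is the kind of finding the “Assess” step is meant to surface before roll-out.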

Lastly, a big part of the AI lifecycle is monitoring and maintaining the model to ensure its reliability in the field. Reacting to changes in the production environment and, whenever the model has underperformed in an incident, conducting a thorough root-cause analysis are key to a sustainable path towards trustworthy, safe, and compliant AI. We call this RMF step “React”. The top priority here is identifying and addressing any issues that arise, such as concept or data drift, model decay, or changing business needs and technology trends. This may involve retraining the model with new data, updating the algorithm or hyperparameters, or even replacing the model entirely with a more suitable one. The key goal of this RMF step is to minimize risks by adjusting whatever needs adjustment, which may include not only the model setup but also processes or standards. Since this is a cycle, “React” can trigger all the other RMF steps again, from a renewed “Define” to a renewed “Assess”.
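For the monitoring that feeds the “React” step, data drift is often quantified with the Population Stability Index (PSI), which compares the distribution of a feature in production against a reference sample. Below is a minimal sketch using equal-width bins derived from the reference data. The bin count, the small `eps` that avoids taking the logarithm of zero, and the 0.2 alert threshold (a widely used rule of thumb, not a value from the Calvin RMF) are all assumptions.

```python
import math


def psi(reference: list[float], production: list[float], bins: int = 10, eps: float = 1e-6) -> float:
    """Population Stability Index between two samples of one numeric feature."""
    lo, hi = min(reference), max(reference)
    width = (hi - lo) / bins

    def shares(sample: list[float]) -> list[float]:
        counts = [0] * bins
        for v in sample:
            idx = int((v - lo) / width) if width > 0 else 0
            idx = min(max(idx, 0), bins - 1)  # clamp out-of-range values into the edge bins
            counts[idx] += 1
        # Floor empty bins at eps so the logarithm below is always defined.
        return [max(c / len(sample), eps) for c in counts]

    ref, prod = shares(reference), shares(production)
    return sum((p - r) * math.log(p / r) for r, p in zip(ref, prod))


PSI_ALERT = 0.2  # assumed rule-of-thumb threshold for significant drift

reference = [i / 1000 for i in range(1000)]         # evenly spread over [0, 1)
production = [0.5 + i / 2000 for i in range(1000)]  # shifted into [0.5, 1)

score = psi(reference, production)
if score > PSI_ALERT:
    print(f"PSI={score:.2f}: drift detected, trigger the React step")
```

A drift alert like this would be one possible input to the root-cause analysis described above; it signals that something changed, not why.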
